Hardware Incident Response: Memory Slot Failure on banshee

No edit summary |

|||

| (4 intermediate revisions by the same user not shown) | |||

| Line 6: | Line 6: | ||

This write-up should be seen as one practical example that may help guide similar interventions in the future or serve as a starting point when assessing next steps in a hardware-related incident. | This write-up should be seen as one practical example that may help guide similar interventions in the future or serve as a starting point when assessing next steps in a hardware-related incident. | ||

== | == Confirmed it's a hardware issue == | ||

In this case we received 2 alerts | In this case we received 2 alerts | ||

1. | 1. | ||

* iDRAC on banshee.idrac.ws.maxmaton.nl reporting critical failure | * iDRAC on banshee.idrac.ws.maxmaton.nl reporting critical failure | ||

| Line 27: | Line 28: | ||

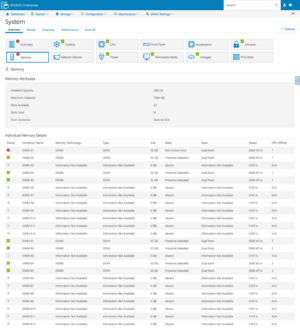

This was also confirmed by checking hardware health on the iDRAC interface: | This was also confirmed by checking hardware health on the iDRAC interface: | ||

[[File:Banshee.idrac.ws.maxmaton.nl restgui index.html 8ce2fb21ce62c14bc4975f040b973a5f(1).png|thumb| | [[File:Banshee.idrac.ws.maxmaton.nl restgui index.html 8ce2fb21ce62c14bc4975f040b973a5f(1).png|thumb|center|alt=Banshee's hardware health on iDRAC|Banshee's hardware health on iDRAC]] | ||

[[File:Image(1).png|thumb|center|alt=Banshee iDRAC logs|Banshee iDRAC logs]] | |||

== Came up with a plan == | |||

Once it was confirmed that the memory issue on banshee was a genuine hardware failure, it was decided that a physical intervention was necessary to replace the faulty memory module. The first step was to migrate all VMs running on Banshee to other available nodes in the Proxmox cluster to avoid service interruption. After ensuring that no critical workloads are running on banshee, the server could be safely shut down in preparation for hardware replacement at the datacenter. | |||

== VMs Migration and Shutdown == | |||

While Proxmox HA is designed to automatically handle VM migrations in the event of node failures, in this case the degraded state of the memory made the bulk migration process unstable, causing the host to crash mid-way through and sending VMs into fencing mode. In hindsight, migrating VMs manually one by one would likely have been a safer strategy. Further technical details of the incident and recovery process can be found in the Zulip conversation. | |||

Things to consider next time: | |||

* Avoid bulk HA-triggered migration if the server is already unstable — migrate VMs manually one at a time | |||

* Verify HA master node is responsive before initiating HA operations | |||

* Test migration procedures on a non-critical VM first | |||

== Intervention at the Datacenter == | |||

Once the replacement memory stick was delivered, we scheduled a physical intervention at the datacenter to carry out the replacement. The goal was to bring the banshee node back online without any hardware issues and reintegrate it into the cluster safely. | |||

To guide the intervention, we used a checklist outlining all the necessary steps — from powering down the machine and replacing the faulty DIMM to validating the memory installation and carefully reintroducing VMs to the node. This helped ensure that each task was executed in the correct order and nothing critical was overlooked. | |||

Note: The checklist we followed is not intended to be a definitive or one-size-fits-all procedure. It should instead be seen as a practical example — a starting point that can be adapted depending on the specific hardware issue, node role, and service criticality involved in future incidents. | |||

* Remove Banshee from HA groups to prevent automatic VM migrations | |||

* Set up maintenance period for Banshee | |||

* Turn Banshee off | |||

* Disconnect Banshee | |||

* Open up Banshee | |||

* Locate and remove the faulty memory stick | |||

* Install the new memory stick in the correct slot | |||

* Record serial numbers of memory sticks in Netbox | |||

* Close Banshee, reconnect power and network, power it on, and connect a monitor | |||

* Enter the Lifecycle Controller and confirm that the memory is detected | |||

* In the Lifecycle Controller, run a memory test: if test fails repeat previous steps with other memory stick | |||

* Migrate a few selected test VMs back to Banshee | |||

* Once the system is stable and VMs are confirmed to run correctly, migrate all intended VMs to Banshee | |||

* Add Banshee back to the original HA groups | |||

* Make sure OSDs come back online | |||

* Remove maintenance period | |||

Latest revision as of 02:31, 8 July 2025

This document outlines how we handled a memory stick failure on the server banshee. It details the actual steps we took, the tools and commands we used, and the reasoning behind our decisions. For full context and team discussions, see the Zulip conversation related to this incident.

⚠️ Important: This is not a universal or comprehensive guide. Hardware failures — including memory issues — can vary widely in symptoms and impact. There may be multiple valid ways to respond depending on the urgency or available resources.

This write-up should be seen as one practical example that may help guide similar interventions in the future or serve as a starting point when assessing next steps in a hardware-related incident.

Confirmed it's a hardware issue

In this case we received two alerts:

1. First alert:
   - iDRAC on banshee.idrac.ws.maxmaton.nl reporting critical failure
   - Overall System Status is Critical (5)

2. Second alert:
   - Overall System Status is Critical (5)
   - Problem with memory in slot DIMM.Socket.A1

To confirm the issue, we logged into the affected server (banshee) and ran the following commands:

journalctl -b | grep -i memory

journalctl -k | grep -i error

The output contained multiple "Hardware Error" entries.
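To re-check the kernel log quickly (for example after the repair), the search can be reduced to a count of matching lines. This is a minimal sketch, assuming the relevant entries contain the text "Hardware Error" as they did in this incident; `count_hw_errors` is a hypothetical helper name, not an existing tool.

```shell
#!/usr/bin/env bash
# count_hw_errors: read log lines on stdin and print how many of them
# mention "Hardware Error" (case-insensitive). Hypothetical helper.
count_hw_errors() {
  # grep -c prints the number of matching lines; "|| true" keeps the
  # exit status at 0 when nothing matches (grep would return 1 and print 0).
  grep -ci 'hardware error' || true
}
```

For example, `journalctl -k | count_hw_errors` counts such lines in the current boot's kernel log (`-k` already implies the current boot). A nonzero count after the DIMM swap would be worth investigating.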

This was also confirmed by checking hardware health on the iDRAC interface:

[Figure: Banshee's hardware health on iDRAC]

[Figure: Banshee iDRAC logs]

Came up with a plan

Once it was confirmed that the memory issue on banshee was a genuine hardware failure, we decided that a physical intervention was necessary to replace the faulty memory module. The first step was to migrate all VMs running on banshee to other available nodes in the Proxmox cluster to avoid service interruption. With no critical workloads left running on banshee, the server could then be safely shut down in preparation for the hardware replacement at the datacenter.
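Before shutting the node down, it helps to confirm that nothing is still running on it. This is a minimal sketch, assuming the default plain-text output of `qm list` (a header row, then VMID, NAME and STATUS as the first three columns), run on banshee itself; `running_vmids` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# running_vmids: read `qm list` output on stdin and print the VMID of
# every guest whose STATUS column is "running". Hypothetical helper.
running_vmids() {
  # Skip the header row (NR > 1); column 1 is VMID, column 3 is STATUS.
  awk 'NR > 1 && $3 == "running" { print $1 }'
}
```

Run on banshee, `qm list | running_vmids` should print nothing before the shutdown. It only covers QEMU VMs; LXC containers would need `pct list`, whose columns are laid out differently.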

VM Migration and Shutdown

Proxmox HA is designed to handle VM migrations automatically when a node fails, but in this case the degraded memory made the bulk migration unstable: the host crashed midway through, sending the VMs into fencing mode. In hindsight, migrating VMs manually one by one would likely have been a safer strategy. Further technical details of the incident and the recovery process can be found in the Zulip conversation.
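A one-at-a-time migration can be scripted around `qm migrate <vmid> <target> --online`, stopping at the first failure so an already unstable host is never asked to move several VMs at once. This is a minimal sketch, not the procedure we used: `migrate_one_by_one` and the node name `pve2` in the example are hypothetical. It also assumes the VMs are not HA-managed at that moment; for HA-managed guests, `qm migrate` only submits an HA migration request and returns before the migration has finished, so the loop would no longer wait between VMs.

```shell
#!/usr/bin/env bash
# migrate_one_by_one TARGET VMID...
# Live-migrate the given VMs to TARGET strictly one after another and stop
# at the first failure. Hypothetical helper: list a non-critical test VM first.
migrate_one_by_one() {
  if [ "$#" -lt 2 ]; then
    echo "usage: migrate_one_by_one TARGET VMID..." >&2
    return 2
  fi
  local target=$1
  shift
  local vmid
  for vmid in "$@"; do
    echo "Migrating VM $vmid to $target"
    # For non-HA guests, qm waits until this migration task has ended.
    if ! qm migrate "$vmid" "$target" --online; then
      echo "Migration of VM $vmid failed; stopping here" >&2
      return 1
    fi
  done
}
```

For example, `migrate_one_by_one pve2 105 101 102` would move a (hypothetical) test VM 105 first and only continue if that succeeds.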

Things to consider next time:

- Avoid bulk HA-triggered migration if the server is already unstable — migrate VMs manually one at a time

- Verify that the HA master node is responsive before initiating HA operations

- Test the migration procedure on a non-critical VM first
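The HA master check can be turned into a quick pre-flight test before any HA operation. This is a minimal sketch, assuming `ha-manager status` prints a `quorum OK` line and a `master <node> (active, <timestamp>)` line (the format we expect from recent Proxmox VE releases; verify it on your version). `ha_master_active` is a hypothetical helper name, and an "active" master entry is only a proxy for responsiveness.

```shell
#!/usr/bin/env bash
# ha_master_active: read `ha-manager status` output on stdin. Print the
# master node and return 0 if the cluster is quorate and the master is
# reported as active; otherwise return 1. Hypothetical helper.
ha_master_active() {
  awk '
    $1 == "quorum" && $2 == "OK" { quorum = 1 }
    # Expected shape: "master pve1 (active, Tue Jul  8 02:31:05 2025)"
    $1 == "master" && $3 == "(active," { master = $2 }
    END {
      if (quorum && master != "") { print master; exit 0 }
      exit 1
    }
  '
}
```

For example, `ha-manager status | ha_master_active || echo "HA master not active: hold off on HA operations"`.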

Intervention at the Datacenter

Once the replacement memory stick was delivered, we scheduled a physical intervention at the datacenter to carry out the replacement. The goal was to bring the banshee node back online without any hardware issues and reintegrate it into the cluster safely.

To guide the intervention, we used a checklist outlining all the necessary steps — from powering down the machine and replacing the faulty DIMM to validating the memory installation and carefully reintroducing VMs to the node. This helped ensure that each task was executed in the correct order and nothing critical was overlooked.

Note: The checklist we followed is not intended to be a definitive or one-size-fits-all procedure. It should instead be seen as a practical example — a starting point that can be adapted depending on the specific hardware issue, node role, and service criticality involved in future incidents.

- Remove Banshee from HA groups to prevent automatic VM migrations

- Set up a maintenance period for Banshee

- Turn Banshee off

- Disconnect Banshee

- Open up Banshee

- Locate and remove the faulty memory stick (slot DIMM.Socket.A1 in this incident)

- Install the new memory stick in the correct slot

- Record serial numbers of memory sticks in Netbox

- Close Banshee, reconnect power and network, power it on, and connect a monitor

- Enter the Lifecycle Controller and confirm that the memory is detected

- In the Lifecycle Controller, run a memory test; if the test fails, repeat the previous steps with another memory stick

- Migrate a few selected test VMs back to Banshee

- Once the system is stable and VMs are confirmed to run correctly, migrate all intended VMs to Banshee

- Add Banshee back to the original HA groups

- Make sure OSDs come back online

- Remove the maintenance period
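Two of the later checklist steps, recording the DIMM serial numbers and making sure the OSDs come back online, can be partly cross-checked from the shell once banshee is running again. This is a minimal sketch under stated assumptions: `dimm_serials` assumes `dmidecode -t 17` (run as root) prints each DIMM as a "Memory Device" record with `Locator:` and `Serial Number:` fields, and `down_osds_on_host` assumes the plain-text column layout of `ceph osd tree` (ID, CLASS, WEIGHT, TYPE NAME, STATUS, ...). Both helper names are hypothetical, and neither replaces the Lifecycle Controller memory test.

```shell
#!/usr/bin/env bash

# dimm_serials: read `dmidecode -t 17` output on stdin and print one
# "SLOT SERIAL" line per memory device. Hypothetical helper.
dimm_serials() {
  awk -F': ' '
    # Field name without its leading indentation.
    { key = $1; sub(/^[ \t]+/, "", key) }
    # "Locator" (not "Bank Locator") holds the slot name, e.g. A1.
    key == "Locator" { slot = $2 }
    # "Serial Number" comes after "Locator" within the same record.
    key == "Serial Number" { print slot, $2 }
  '
}

# down_osds_on_host HOST: read `ceph osd tree` output on stdin and print
# every OSD under HOST whose STATUS column is not "up".
# Exit status: 0 = all up, 1 = some OSD not up, 2 = HOST missing or not found.
# Hypothetical helper.
down_osds_on_host() {
  if [ -z "$1" ]; then
    echo "usage: down_osds_on_host HOST" >&2
    return 2
  fi
  awk -v host="$1" '
    # Bucket rows have negative IDs; remember which host we are under.
    $1 ~ /^-[0-9]+$/ {
      current = ""
      for (i = 2; i < NF; i++) if ($i == "host") current = $(i + 1)
      if (current == host) found = 1
      next
    }
    # OSD rows: the column after the osd.N name is STATUS.
    current == host {
      for (i = 2; i < NF; i++)
        if ($i ~ /^osd\.[0-9]+$/ && $(i + 1) != "up") { print $i; down = 1 }
    }
    END {
      if (!found) exit 2
      if (down) exit 1
    }
  '
}
```

For example, `dmidecode -t 17 | dimm_serials` lists slot/serial pairs to compare against what was recorded in Netbox, and `ceph osd tree | down_osds_on_host banshee` prints any of banshee's OSDs that are not `up`. The OSD check ignores the REWEIGHT column, so an OSD that is up but marked out would still pass.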