WS Proxmox node reboot

== Tips & Notes == | |||

* Check all Ceph pools are running on at least 2 | * If you're expecting to reboot every node in the cluster, do the node with the containers last, to limit the amount of downtime and reboots for them | ||

* Updating a node: `apt update` and `apt full-upgrade` | |||

* Make sure all VMs are actually migratable before adding to a HA group | |||

* If there are containers on the device you are looking to reboot- you are going to need to also create a maintenance mode to cover them (for example teamspeak or awstats) | |||

* Containers will inherit the OS of their host, so you will also need to handle triggers related to their OS updating, where appropriate | |||

== Pre-Work == | |||

* If a VM or container is going to incur downtime, you must let the affected parties know in advance. Ideally they should be informed the previous day. | |||

== Pre-flight checks == | |||

* Check all Ceph pools are running on at least 3/2 replication | |||

* Check that all running VM's on the node you want to reboot are in HA (if not, add them or migrate them away manually) | * Check that all running VM's on the node you want to reboot are in HA (if not, add them or migrate them away manually) | ||

* Check that Ceph is healthy -> No remapped PG's, or degraded data redundancy | * Check that Ceph is healthy -> No remapped PG's, or degraded data redundancy | ||

* You have communicated that downtime is expected to the users who will be affected (Ideally one day in advance) | |||

== Update Process == | |||

* Update the node: `apt update` and `apt full-upgrade` | |||

* Check the packages that are removed/updated/installed correctly and they are the sane (to make sense) | |||

== Reboot process == | |||

* Complete the pre-flight checks | |||

* If you want to reboot for a kernel update, make sure the kernel is updated by following the Update Process written above | |||

* Start maintenance mode for the Proxmox node and any containers running on the node | * Start maintenance mode for the Proxmox node and any containers running on the node | ||

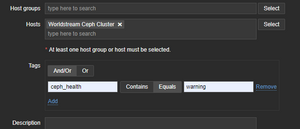

* Start maintenance mode for Ceph, specify that we only want to surpress the trigger for health state being in warning by setting tag `ceph_health` equals `warning` | * Start maintenance mode for Ceph, specify that we only want to surpress the trigger for health state being in warning by setting tag `ceph_health` equals `warning` | ||

* Let affected parties know that the mainenance period you told them about in the preflight checks is about to take place. | |||

[[File:Ceph-maintenance.png|thumb]] | [[File:Ceph-maintenance.png|thumb]] | ||

* Set noout flag on host: `ceph osd set-group noout <node>` | * Set noout flag on host: `ceph osd set-group noout <node>` | ||

# gain ssh access to host | |||

# Log in through IPA | |||

# Run the command | |||

* '''Reboot''' node through web GUI | * '''Reboot''' node through web GUI | ||

* Wait for node to come back up | * Wait for node to come back up | ||

* Wait for OSD's to be back online | * Wait for OSD's to be back online | ||

* Remove noout flag on host: `ceph osd unset-group noout <node>` | * Remove noout flag on host: `ceph osd unset-group noout <node>` ,to do this: | ||

* Ackowledge triggers | * If a kernel update was done, manually execute the `Operating system` item manually to detect the update. Manually executing the two items that indicate a reboot is also usefull if they were firing, to stop them/check no further reboots are needed. | ||

* Ackowledge & close triggers | |||

* Remove maintenance modes | * Remove maintenance modes | ||

== Aftercare == | |||

* Ensure that Kaboom API is running on Screwdriver or Paloma. This is to get the best performance for the VM. | |||

Latest revision as of 05:55, 12 August 2024

Tips & Notes

- If you're expecting to reboot every node in the cluster, do the node with the containers last, to limit the amount of downtime and reboots for them

- Updating a node: `apt update` and `apt full-upgrade`

- Make sure all VMs are actually migratable before adding them to an HA group

- If there are containers on the node you are going to reboot, you also need to create a maintenance mode that covers them (for example teamspeak or awstats)

- Containers share the kernel of their host, so you will also need to handle the triggers related to their OS updating, where appropriate

Pre-Work

- If a VM or container is going to incur downtime, you must let the affected parties know in advance. Ideally they should be informed the previous day.

Pre-flight checks

- Check that all Ceph pools are running on at least 3/2 replication (size 3, min_size 2)

- Check that all running VMs on the node you want to reboot are in HA (if not, add them or migrate them away manually)

- Check that Ceph is healthy -> No remapped PGs and no degraded data redundancy

- Check that you have communicated the expected downtime to the users who will be affected (ideally one day in advance)
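The checks above can be sketched as shell commands. This is a minimal sketch, not an established procedure: the helper name `pools_below_3_2` is hypothetical, it reads "3/2" as size 3 with min_size 2, and it assumes that `ceph osd pool ls detail` prints one line per pool containing `size <n> min_size <n>`. The commands listed in the comments are meant to be run by hand on a cluster node.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every pool whose replication is
# below 3/2. Expects `ceph osd pool ls detail` output on stdin.
pools_below_3_2() {
    awk '$1 == "pool" {
        size = 0; min = 0
        for (i = 1; i < NF; i++) {
            if ($i == "size")     size = $(i + 1)
            if ($i == "min_size") min  = $(i + 1)
        }
        # $3 is the pool name, still wrapped in the quotes Ceph prints
        if (size < 3 || min < 2) print $3
    }'
}

# Run by hand on the node (not executed by this snippet):
#   ceph osd pool ls detail | pools_below_3_2   # should print nothing
#   ceph health detail                          # look for remapped or degraded PGs
#   ceph pg stat                                # summary of PG states
#   ha-manager status                           # VMs that are under HA
#   qm list                                     # VMs present on this node
```

Any pool name printed by the helper needs attention before the reboot goes ahead.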

Update Process

- Update the node: `apt update` and `apt full-upgrade`

- Check that the packages being removed, updated and installed are sane (i.e. the changes make sense)
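One way to review the package changes before applying them is to simulate the upgrade first. The sketch below is an illustration only: `summarise_simulation` is a hypothetical helper, and it assumes the `Inst` and `Remv` line prefixes that `apt-get -s` (simulate) prints for packages to be installed or upgraded and for packages to be removed.

```shell
#!/bin/sh
# Hypothetical helper: summarise `apt-get -s full-upgrade` output from stdin.
summarise_simulation() {
    awk '
        $1 == "Inst" { inst++ }                           # install or upgrade
        $1 == "Remv" { remv++; removed = removed " " $2 } # removal
        END {
            printf "install_or_upgrade=%d remove=%d\n", inst, remv
            if (remv) print "removed:" removed
        }
    '
}

# Run by hand on the node (not executed by this snippet):
#   apt update
#   apt-get -s full-upgrade | summarise_simulation   # review before changing anything
#   apt full-upgrade
```

Unexpected entries on the `removed:` line are the main thing to look at before running the real upgrade.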

Reboot process

- Complete the pre-flight checks

- If you are rebooting for a kernel update, make sure the kernel has been updated by following the Update Process above

- Start maintenance mode for the Proxmox node and any containers running on the node

- Start maintenance mode for Ceph, and specify that we only want to suppress the trigger for the health state being in warning by setting tag `ceph_health` equals `warning`

- Let affected parties know that the maintenance period you told them about in the pre-flight checks is about to take place.

- Set noout flag on host: `ceph osd set-group noout <node>`

  - Gain SSH access to the host

  - Log in through IPA

  - Run the command

- Reboot node through web GUI

- Wait for node to come back up

- Wait for OSDs to be back online

- Remove noout flag on host: `ceph osd unset-group noout <node>`, using the same access steps as when setting it

- If a kernel update was done, manually execute the `Operating system` item so the update is detected. Manually executing the two items that indicate a reboot is also useful if they were firing, both to clear them and to check that no further reboots are needed.

- Acknowledge & close triggers

- Remove maintenance modes
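The noout part of the process above could be scripted roughly as follows. This is a sketch under stated assumptions rather than the procedure itself: `wait_until`, `all_osds_up` and `osds_ready` are hypothetical helpers, and the parser assumes that `ceph osd stat` prints a summary of the form `N osds: M up`. It checks OSDs across the whole cluster, not only those on the rebooted node.

```shell
#!/bin/sh
# Hypothetical helper: retry a command up to $1 times, sleeping $2 seconds
# after each failed attempt.
wait_until() {
    attempts=$1; delay=$2; shift 2
    while [ "$attempts" -gt 0 ]; do
        "$@" && return 0
        attempts=$((attempts - 1))
        sleep "$delay"
    done
    return 1
}

# Hypothetical helper: succeed when the "N osds: M up" summary on stdin
# has N equal to M.
all_osds_up() {
    awk 'match($0, /[0-9]+ osds: [0-9]+ up/) {
        split(substr($0, RSTART, RLENGTH), f, " ")
        found = 1; ok = (f[1] == f[3])
        exit
    }
    END { exit !(found && ok) }'
}

osds_ready() { ceph osd stat | all_osds_up; }

# Run by hand on the node (not executed by this snippet):
#   ceph osd set-group noout <node>
#   ... reboot the node through the web GUI and wait for it to come back ...
#   wait_until 60 10 osds_ready && ceph osd unset-group noout <node>
```

Chaining the unset behind `wait_until` means the flag is only removed once every OSD reports as up; if the wait times out, the flag stays set and the cluster needs a manual look.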

Aftercare

- Ensure that Kaboom API is running on Screwdriver or Paloma. This is to get the best performance for the VM.